Decentralized Quantum-resistant AI Supercomputing Network

Summary

The development of quantum computers poses a severe threat to blockchain technology. A sufficiently powerful quantum computer running Shor's algorithm (a quantum algorithm for integer factorization and discrete logarithms) could derive the private keys of today's signature algorithms from their public keys. This would be a disaster: all digital assets on current blockchains would no longer be secure and could be stolen.

However, we are not helpless in the face of the quantum computing threat. Post-quantum algorithms (a new generation of public-key cryptographic algorithms that run on classical computers and are designed to resist attacks by quantum computers) provide a solution. Using them requires replacing the blockchain's underlying signature algorithm with a post-quantum signature algorithm.

Unavoidable issues: Existing blockchain designs (such as Bitcoin and Ethereum) cannot resist quantum computing attacks simply by upgrading their signature algorithm. Digital assets and smart contract data on these chains are authenticated and traded through addresses, and each account address is generated by hashing a public key. The private key, public key, address, assets, and data are therefore uniquely bound to one another, so the public key and address cannot simply be replaced with ones from a post-quantum signature algorithm. Because addresses and data are strictly bound, the blockchain data cannot be migrated, and block data generated under the old signature algorithm (such as ECC) would become untrustworthy. Our only option is to design a new blockchain whose underlying signature algorithm is post-quantum, forming a new quantum-resistant blockchain.

Blockchain Development Trends: In late 2008, Bitcoin emerged as a peer-to-peer electronic cash system used primarily in the financial sector. In late 2013, the Ethereum white paper was released, introducing the Ethereum Virtual Machine (EVM), a Turing-complete virtual machine that extended blockchain applications beyond the financial sector. Since then, blockchain technology has developed around business applications, with IPFS, Web3.0, DeFi, and GameFi as the hot development directions of recent years. In the future, building infrastructure (universal storage services, universal computing services, AI computing power services, etc.) that gives business applications better platforms to run on will be the mainstream trend in the blockchain industry. The industry should not focus only on finance, but should keep expanding into business applications and larger markets to achieve sustainable development.

To provide general storage services, general computing services, and AI supercomputing services, blockchain technology must solve several technical problems. The most prominent is limited scalability, which prevents many applications from being deployed. For example, Ethereum processes about 20 transactions per second, which cannot support applications with large volumes of data and interactions. Blockchains need better scalability mechanisms. In addition, the EVM has limitations (low computing efficiency, a closed computing environment, limited memory, and its execution mechanism) that make it incompatible with most application services, so only simple dApps can run on it.

However, advances in the IPFS protocol, blockchain modularization (which addresses scalability), and blockchain virtual machines (based on container technology) are solving the scalability and computing problems of blockchains. Decentralized "general storage" and "general computing" can now be realized, allowing more applications to be deployed on the blockchain and opening the way for AI workloads. By aggregating the GPU computing power of computing nodes through peer-to-peer container orchestration, a decentralized AI supercomputing base can be built to support a wide range of AI applications and model training.

Qoin is a decentralized quantum-resistant AI supercomputing network. It uses multiple NIST-selected post-quantum algorithms as its underlying signature algorithms so that blockchain assets are protected against quantum computer attacks. Building on decentralized network storage, modular design, and virtual machine technology, Qoin provides general storage and general computing services that form a base computing platform for AI.

Preface

This document gives an overview of the decentralized quantum-resistant AI supercomputing network, describing its technical construction and application value, and explains the feasibility of the architecture through the technologies the system uses. It is not a standard technical development document and does not provide details of specific APIs, SDKs, or application code. It is a living document that will be maintained over the long term, not a final version. It contains the core descriptions of the protocols and ideas, and components will be added, redefined, or removed in response to community feedback and comments.

Introduction

The decentralized quantum-resistant AI supercomputing network (DQASN) is a quantum-resistant AI supercomputing network that focuses on decentralized network security and decentralized business applications. It aims to improve AI efficiency by reducing data movement. At the blockchain's base, signatures from multiple post-quantum algorithms are combined into a multi-signature, forming a multi-layered quantum-resistant defense. The blockchain uses a modular architecture that decouples the system into separate components. This decoupling yields higher scalability and security and makes general storage and general computing possible.

Multi-Quantum-Resistant-Signature Scheme: Ensures the security of the blockchain's underlying layer and effectively resists quantum computer attacks, ensuring the security of user transactions and the verification of the authenticity of block synchronization and other processes.

Decentralized AI Supercomputing Network: Aims to build a trustless distributed computing network for Web3 that provides infrastructure for AI inference, ML training, analytics, data engineering, data science, and DeSci use cases, addressing the limited accessibility of high-performance computing hardware. The network extends broad, global access to computing power and improves the efficiency, transparency, and accessibility of high-performance computing hardware.

Decentralized storage network: Provides a storage market in which storage resources are traded and storage providers are independent individuals, making it a truly decentralized storage solution. Anyone can become a storage provider by running the protocol.

Modular Blockchain: The modular design of the blockchain, while maintaining decentralization, reduces redundant calculations in the network, significantly improving scalability. Since consensus nodes only need to handle the traditional block verification and do not have to spend time executing transactions, the block propagation speed in the network is accelerated.

Blockchain as a Service: To facilitate the commercialization of blockchain applications (personal AI micro-training, Web3.0 website access, and distributed applications), lower the barrier to entry for ordinary users, and support application developers, DQASN provides integrated services directly to ordinary users. The DQASN client supports integrated front-end and back-end services.

Decentralized Quantum-resistant AI Supercomputing Network

DQASN is a trustless distributed computing network built on blockchain smart contracts that lets developers call any verifiable computation job directly. Through a decentralized market for outsourced computation, users can access verifiable, distributed off-chain computing from smart contracts. DQASN will unlock the next generation of Internet-scale models and applications in Web3, creating infrastructure for AI inference, ML training, analytics, data engineering, data science, and DeSci use cases.

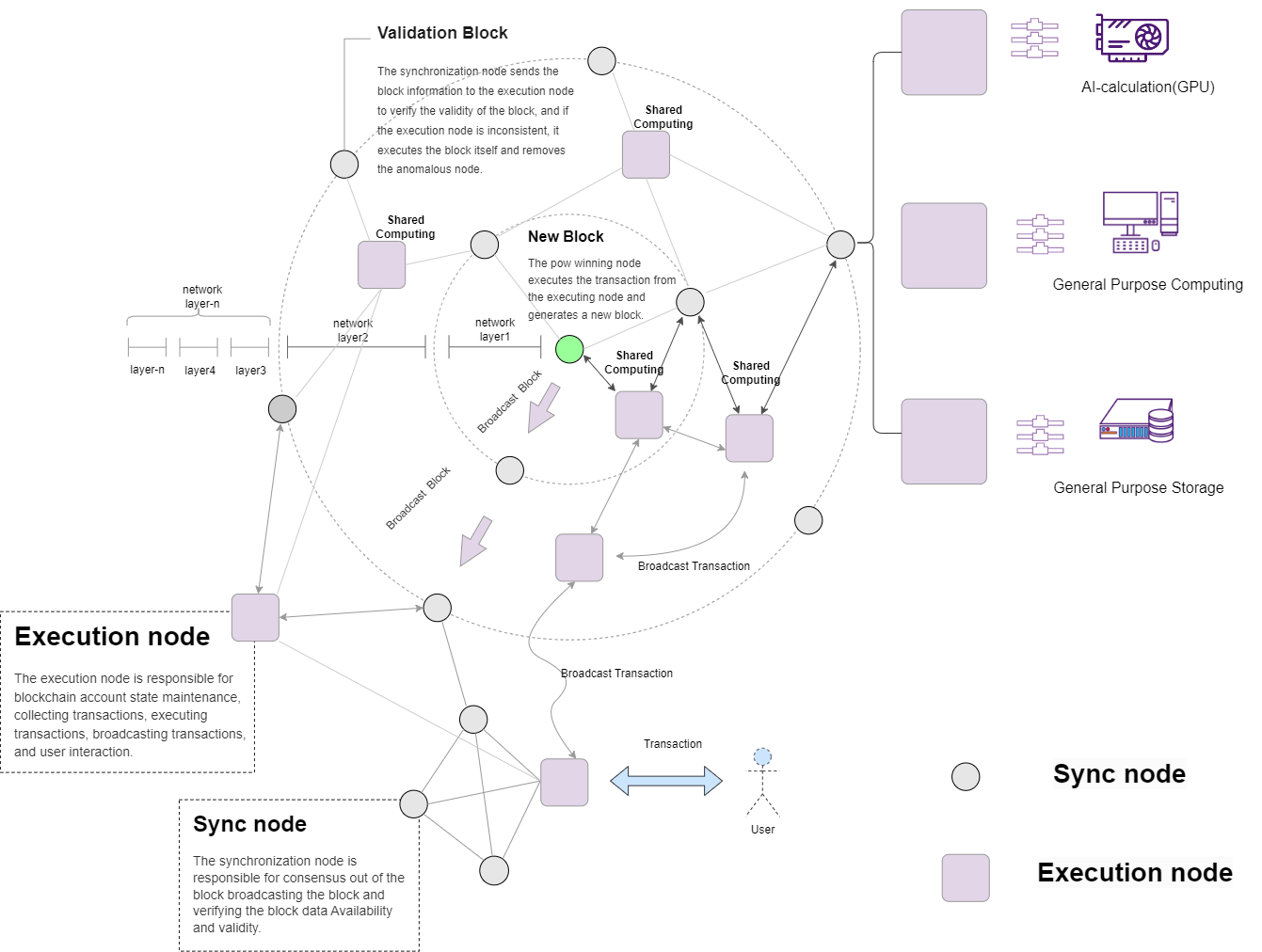

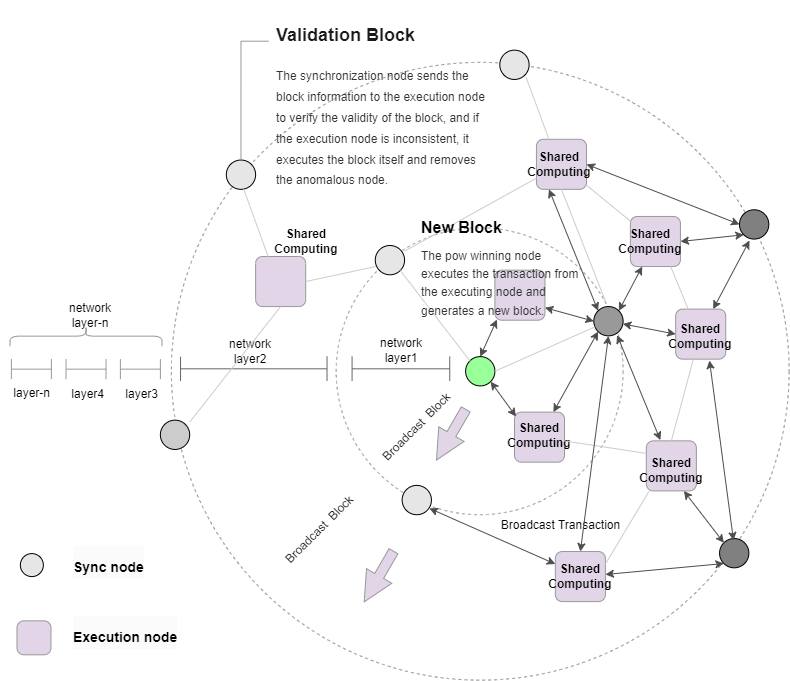

The DQASN modular blockchain system mainly consists of a blockchain synchronization module and a blockchain execution module. The blockchain synchronization module is responsible for generating new blocks, block validation, and synchronizing blocks. The blockchain uses the PQC-POW mechanism to generate new blocks (explained in detail later). The blockchain execution module is responsible for transaction execution, block validation, and account state storage.

DQASN uses smart contracts to implement the application market, providing transaction negotiation, verification, and settlement for computing and storage users, creating an open, collaborative computing ecosystem.

Multi-Quantum-Resistant-signature Security

Post-Quantum Cryptography

Post-quantum cryptography (sometimes called quantum-proof, quantum-safe, or quantum-resistant cryptography) refers to cryptographic algorithms (usually public-key algorithms) that are believed to be resistant to attacks by quantum computers. Since February 2016, NIST has been soliciting proposals for post-quantum algorithms from around the world. As of November 2023, NIST has published draft standards for three of the selected algorithms: CRYSTALS-Kyber (a key-encapsulation mechanism) as FIPS 203, and the signature schemes CRYSTALS-Dilithium and SPHINCS+ as FIPS 204 and FIPS 205. A draft standard for the fourth selected algorithm, FALCON, is expected in 2024. These are the first post-quantum cryptography standards proposed by NIST, the result of a process lasting nearly seven years.

Multi-Signatures

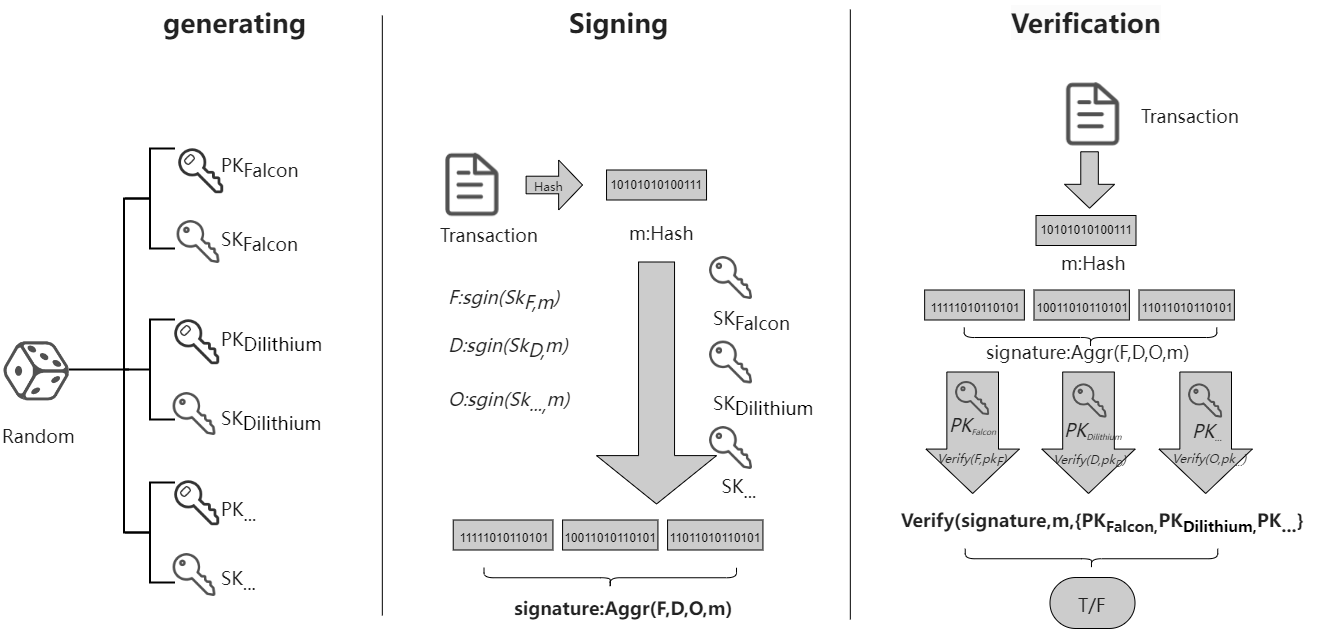

The multi-signature scheme in the DQASN uses two or more post-quantum algorithms to generate key pairs:

(skFalcon, pkFalcon), (skDilithium, pkDilithium) = KeyGen(R)

Multi-signature: Multiple private keys are used to sign a transaction:

Signature = multiSignatures (sign(skFalcon), sign(skDilithium), m)

Multi-signature verification: In the verification process, multiple public keys are required to verify that the signature is legitimate

True/False = Verify(signature, m, {pkFalcon, pkDilithium})
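The key generation, signing, and verification flow above can be sketched in Python. Falcon and Dilithium are not available in Python's standard library, so this minimal sketch substitutes two Lamport one-time signature instances (a hash-based scheme, itself considered post-quantum) built on SHA-256 and SHA3-256. The names `key_gen`, `multi_sign`, and `multi_verify` are hypothetical. The point of the sketch is the combining rule: a multi-signature verifies only if every component signature verifies under its own scheme. A Lamport key must never sign more than one message, so this is an illustration and not a wallet implementation.

```python
import hashlib
import secrets
from typing import Callable, List

Hash = Callable[[bytes], bytes]

def _bits(digest: bytes) -> List[int]:
    """Expand a 32-byte digest into 256 bits, most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]

class Lamport:
    """One-time hash-based signature, used here only as a stand-in for Falcon / Dilithium."""

    def __init__(self, h: Hash):
        self.h = h

    def keygen(self):
        sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
        pk = [(self.h(a), self.h(b)) for a, b in sk]
        return sk, pk

    def sign(self, sk, m: bytes) -> List[bytes]:
        # Reveal one secret per bit of H(m).
        return [sk[i][bit] for i, bit in enumerate(_bits(self.h(m)))]

    def verify(self, pk, m: bytes, sig: List[bytes]) -> bool:
        bits = _bits(self.h(m))
        return len(sig) == len(bits) and all(
            self.h(s) == pk[i][b] for i, (b, s) in enumerate(zip(bits, sig)))

SCHEMES = [Lamport(lambda b: hashlib.sha256(b).digest()),    # stand-in for Falcon
           Lamport(lambda b: hashlib.sha3_256(b).digest())]  # stand-in for Dilithium

def key_gen():
    """One (sk, pk) pair per scheme."""
    return [s.keygen() for s in SCHEMES]

def multi_sign(secret_keys, m: bytes):
    return [s.sign(sk, m) for s, sk in zip(SCHEMES, secret_keys)]

def multi_verify(signature, m: bytes, public_keys) -> bool:
    # Logical AND: every component signature must verify under its own public key.
    return len(signature) == len(SCHEMES) and all(
        s.verify(pk, m, part) for s, pk, part in zip(SCHEMES, public_keys, signature))

pairs = key_gen()
sig = multi_sign([sk for sk, _ in pairs], b"example transaction")
print(multi_verify(sig, b"example transaction", [pk for _, pk in pairs]))  # True
```

Because verification is a logical AND, an attacker who could forge only one of the component signatures still could not produce a valid multi-signature.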

The DQASN multi-signature differs from existing blockchains (e.g., BTC, ETH), whose multi-signatures use a single signature algorithm (ECC: Elliptic Curve Cryptography) with several private keys. The DQASN multi-signature is protected by two or more different post-quantum signature algorithms, so forging it requires breaking every one of them, which makes it more secure. The DQASN multi-signature is mainly implemented in blockchain wallets and blockchain verification nodes. The post-quantum signature algorithms are introduced below:

Falcon

Falcon [1] is a lattice-based digital signature algorithm, submitted to the NIST Post-Quantum Cryptography project on November 30, 2017.

Security: A true Gaussian sampler is used internally, which guarantees negligible leakage of key information up to a practically infinite number of signatures (over 2^64).

Compactness: Because Falcon uses NTRU lattices, its signatures are substantially shorter than those of any other lattice-based signature scheme with the same security guarantees, while its public keys are about the same size.

Speed: The use of fast Fourier sampling enables extremely fast implementations, with thousands of signatures per second on a common computer; verification speeds are five to ten times faster.

Scalability: For degree n, the cost is O(n log n), allowing the use of very long-term security parameters at moderate cost.


RAM Economy: The enhanced key generation algorithm of Falcon uses less than 30 KB of RAM, a hundredfold improvement over previous designs such as NTRUSign. Falcon is compatible with small, memory-constrained embedded devices.

Performance

Using the reference implementation on a common desktop computer (Intel® Core® i5-8259U at 2.3 GHz, TurboBoost disabled), Falcon achieves the following performance:

Sizes (key generation RAM usage, public key size, signature size) are given in bytes, key generation time in milliseconds, and signing and verification throughput in operations per second.

| Variant | Keygen time (ms) | Keygen RAM (bytes) | Sign/s | Verify/s | Public key (bytes) | Signature (bytes) |
|---|---|---|---|---|---|---|
| FALCON-512 | 8.64 | 14,336 | 5,948.1 | 27,933.0 | 897 | 666 |
| FALCON-1024 | 27.45 | 28,672 | 2,913.0 | 13,650.0 | 1,793 | 1,280 |

Dilithium

Dilithium [2] is a digital signature scheme that is strongly secure under chosen-message attacks, based on the hardness of lattice problems over module lattices. Dilithium was submitted to the NIST Post-Quantum Cryptography project and has been selected by NIST for standardization.

Performance

All benchmarks were obtained on one core of an Intel Core i7-6600U (Skylake) CPU. Key generation, signing, and verification costs are given in CPU cycles for the reference (ref) and AVX2-optimized (avx2) implementations. Public key and signature sizes are given in bytes.

| Parameter set | Public key (bytes) | Signature (bytes) | Keygen cycles (ref) | Sign cycles (ref) | Verify cycles (ref) | Keygen cycles (avx2) | Sign cycles (avx2) | Verify cycles (avx2) |
|---|---|---|---|---|---|---|---|---|
| Dilithium2 | 1,312 | 2,420 | 300,751 | 1,355,434 | 327,362 | 124,031 | 333,013 | 118,412 |
| Dilithium3 | 1,952 | 3,293 | 544,232 | 2,348,703 | 522,267 | 256,403 | 529,106 | 179,424 |
| Dilithium5 | 2,592 | 4,595 | 819,475 | 2,856,803 | 871,609 | 298,050 | 642,192 | 279,936 |
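To show what the multi-signature scheme costs in size, the short calculation below adds up the public key and signature sizes when one Falcon parameter set is combined with one Dilithium parameter set. It assumes the two public keys and the two signatures are simply concatenated with no extra encoding overhead. This is an assumption, since the DQASN encoding is not specified here. The sizes are those published for the Falcon and round-3 Dilithium parameter sets.

```python
# Public key and signature sizes in bytes for each parameter set.
FALCON = {"FALCON-512": (897, 666), "FALCON-1024": (1793, 1280)}
DILITHIUM = {"Dilithium2": (1312, 2420), "Dilithium3": (1952, 3293), "Dilithium5": (2592, 4595)}

def combined_sizes(falcon: str, dilithium: str) -> tuple:
    """Total bytes for both public keys and both signatures, assuming plain concatenation."""
    f_pk, f_sig = FALCON[falcon]
    d_pk, d_sig = DILITHIUM[dilithium]
    return f_pk + d_pk, f_sig + d_sig

for f in FALCON:
    for d in DILITHIUM:
        pk, sig = combined_sizes(f, d)
        print(f"{f} + {d}: public keys {pk:,} bytes, signatures {sig:,} bytes")
```

For example, FALCON-512 combined with Dilithium2 needs 2,209 bytes of public keys and 3,086 bytes of signatures per signer.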

Modular Blockchain System Architecture

The existing blockchain architecture binds consistency and validity too tightly, limiting the extensibility and scalability of the blockchain. DQASN adopts a modular design of the blockchain system to improve scalability.

Blockchain System Decoupling

DQASN decouples the blockchain system so that multiple modules cooperate to produce and process blocks. Decoupling the blockchain system has the following benefits:

Independent operation: Decoupling makes it easier to build and run a DQASN node, as the system is isolated into separate programs that run independently, reducing the pressure on running nodes.

Security isolation: Different modules in the blockchain system have different security requirements, and the blockchain synchronization module requires higher operational security. Decoupling lets each module meet its own security and functionality requirements. A good example is separating blockchain processing from data transmission.

Scalability: Independent running modules expand the processing capabilities of the blockchain system. Each module is designed with dedicated functions, allowing the synchronization module to focus on blockchain consensus and security, while the execution module focuses on transaction execution. This allows the synchronization subsystem to share the results of transaction execution, reducing the block network synchronization time.

Security Model

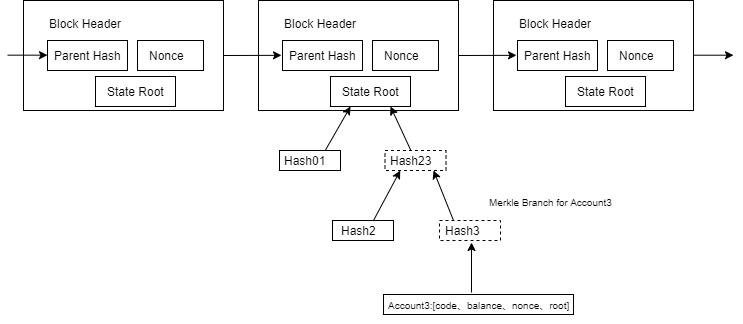

Account state sampling:

The blockchain system node consists of a consensus client and an execution client. Because the two clients are separated and connected by a network, there is a trust issue. In the process of verifying the authenticity of a block, the consensus client must request the state data from the execution client. If the execution client is malicious, the consensus client cannot verify the authenticity of the block. To ensure that the execution client provides real data, the consensus client uses account state sampling to verify the state data of the execution client.

Account state sampling works as follows. The consensus client randomly selects accounts from the transaction pool and requests each account's state and Merkle path from the execution client. It then checks the authenticity of each account state by recomputing the State Root from the path. Because the accounts are sampled at random, the execution client cannot prepare the sampled states in advance and must maintain a complete and truthful account state tree.
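Account state sampling can be illustrated with a plain binary Merkle tree. This is a minimal sketch under assumptions. Real chains such as Ethereum use a Merkle Patricia trie, and the leaf encoding, the `ExecutionClient` class, and the `sample_and_check` helper are hypothetical. The consensus side is assumed to already trust the state root (for example, the one committed in the block being verified). It accepts a sampled account only if the returned Merkle path recomputes that root.

```python
import hashlib
import random
from typing import Dict, List, Tuple

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def leaf(account: str, state: int) -> bytes:
    # Hypothetical leaf encoding: H("account:state").
    return h(f"{account}:{state}".encode())

def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """Binary Merkle tree, bottom level first; an odd last node is paired with itself."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels

def merkle_path(levels: List[List[bytes]], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True when the sibling is on the left."""
    path = []
    for level in levels[:-1]:
        sib = index ^ 1
        path.append((level[sib] if sib < len(level) else level[index], index % 2 == 1))
        index //= 2
    return path

def verify_path(node: bytes, path: List[Tuple[bytes, bool]], root: bytes) -> bool:
    for sibling, sibling_is_left in path:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root

class ExecutionClient:
    """Stores account state and answers state queries with Merkle paths."""

    def __init__(self, states: Dict[str, int]):
        self.accounts = sorted(states)
        self.states = dict(states)
        self.levels = build_levels([leaf(a, states[a]) for a in self.accounts])

    def state_root(self) -> bytes:
        return self.levels[-1][0]

    def get_account(self, account: str) -> Tuple[int, List[Tuple[bytes, bool]]]:
        return self.states[account], merkle_path(self.levels, self.accounts.index(account))

def sample_and_check(client, state_root: bytes, tx_pool_accounts: List[str],
                     k: int, rng: random.Random) -> bool:
    """Consensus-side check: sample k accounts from the pool and verify each against state_root."""
    for account in rng.sample(tx_pool_accounts, k):
        state, path = client.get_account(account)
        if not verify_path(leaf(account, state), path, state_root):
            return False
    return True
```

Suppose an execution client reports a state that differs from the one committed in the root. It fails as soon as that account is sampled, because the reported state and path no longer hash to the trusted root.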

Consensus layer:

Synchronization nodes provide the implementation of the consensus mechanism, generate new blocks based on the PQC-POW algorithm, and judge the availability and validity of data through the state transition results of multiple execution nodes. When the state results of the execution nodes are inconsistent, the synchronization node will locally execute the transaction to confirm the correct state, and remove the execution nodes that provide invalid states. Synchronization nodes only store block data and not account state, so "account state random sampling" is used to verify the user state obtained from the execution node.
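The synchronization node's cross-checking rule can be sketched as follows. The function name, the representation of a state root as a string, and the `execute_locally` callback are hypothetical. The logic follows the text. If all execution nodes that have not been excluded agree, the node accepts their result. Otherwise it executes the block locally and excludes every node that reported a different state.

```python
from typing import Callable, Dict, Set

def confirm_state_root(
    block_id: str,
    reports: Dict[str, str],               # execution node id -> state root it returned
    execute_locally: Callable[[str], str],  # re-executes the block's transactions
    excluded: Set[str],                     # execution nodes already removed
) -> str:
    """Return the state root the synchronization node accepts for block_id."""
    roots = {root for node, root in reports.items() if node not in excluded}
    if len(roots) == 1:
        return roots.pop()                  # all remaining execution nodes agree
    correct = execute_locally(block_id)     # disagreement (or no answers): execute locally
    for node, root in reports.items():
        if root != correct:
            excluded.add(node)              # remove nodes that provided an invalid state
    return correct
```

A unanimous answer is accepted without local execution, so this rule relies on at least one honest execution node being among those queried.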

Execution layer:

The execution client stores the blockchain account state and executes transactions to update user state. It provides shared computing for the consensus network, which reduces redundant computation during block confirmation and speeds confirmation up. Because the execution layer stores the blockchain state, the storage load on consensus nodes is reduced, which improves decentralized security and block scalability. The execution client also collects, broadcasts, and executes transactions and provides services to the consensus layer. It interacts with users, offering transaction, storage, and application development and deployment functions.

Shared Computing

Block propagation time in the network is mainly limited by network bandwidth and node computing power. Execution-layer nodes can provide shared computing for consensus-layer nodes to reduce this pressure. A consensus node generates a new block through the proof-of-work mechanism and broadcasts it to the execution-layer nodes, which verify it and synchronize state. When a synchronization node receives a new block, it only needs to confirm the block's correctness with the execution-layer network instead of re-executing the block's transactions itself.

Block broadcast

After a consensus node in the first-layer network generates a new block, the block is broadcast to the consensus nodes and nearby execution nodes of the second-layer network. When the second-layer consensus nodes verify the block, they can obtain the execution state directly from the first-layer execution nodes. Likewise, the second-layer and third-layer consensus networks share common execution clients, so the consensus network can skip redundant execution steps and synchronize blocks faster.

Consensus security

Consensus nodes verify blocks by querying several different nearby execution nodes. If any execution node returns an invalid response, the consensus node verifies the block's validity locally. Consensus security is therefore guaranteed as long as a sufficient number of honest execution clients exist in the network.
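The condition of having enough honest execution clients can be made concrete with a small calculation. Assume a consensus node queries k execution nodes uniformly at random, without replacement, from n nodes. Assume further that d of those nodes are dishonest and collude by returning the same false result. The consensus node is then misled only if every queried node is dishonest, which happens with probability C(d, k) / C(n, k).

```python
from math import comb

def p_all_dishonest(n: int, d: int, k: int) -> float:
    """Probability that k nodes drawn without replacement from n are all among the d dishonest ones."""
    if k > d:
        return 0.0
    return comb(d, k) / comb(n, k)

print(round(p_all_dishonest(100, 30, 5), 4))  # 0.0019
```

For instance, with 100 execution nodes of which 30 are dishonest, querying 5 of them gives a probability of about 0.0019 that all five are dishonest. Querying more nodes drives this probability down quickly.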

Application Market

DQASN has two markets: the general storage market and the general computing market. The general storage market allows customers to pay storage vendors to store data. The general computing market allows customers to pay computing vendors to run computing tasks. In both cases, customers and vendors can set their bid and ask prices or accept the current prices. The network guarantees that vendors receive rewards from customers when they provide services.

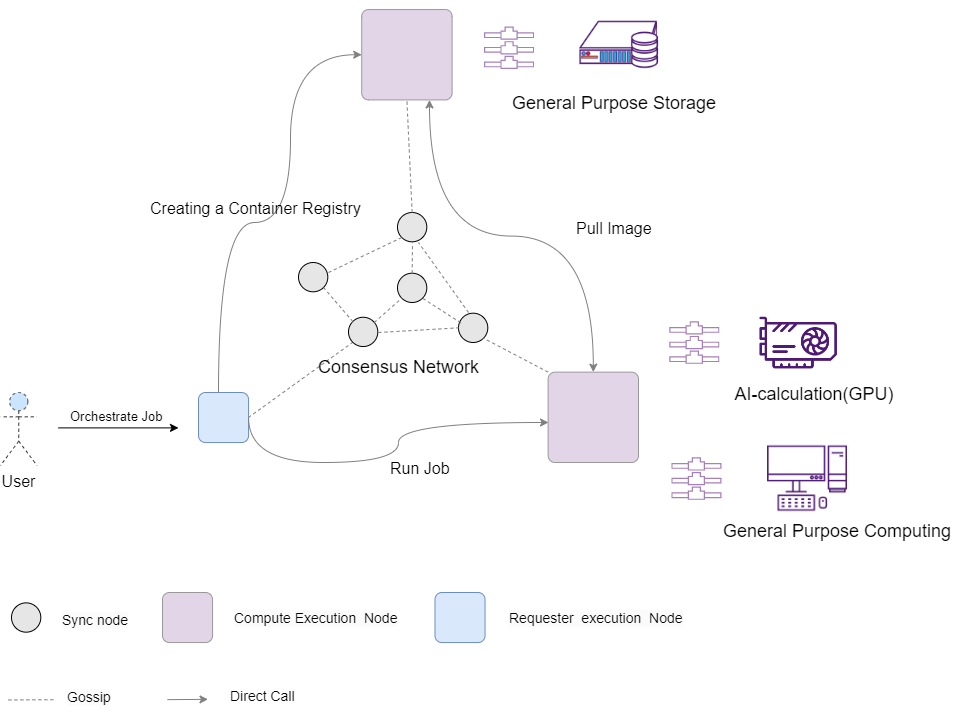

General Computing Market

Users can run any Docker image as a task to build their own applications (AI inference, ML training, analytics, data engineering, data science, DeSci, etc.) through the decentralized general computing market. The decentralized general computing market seeks to change the way large datasets are processed to reduce costs and increase efficiency, and make data processing more accessible to a wider audience.

General Computing Market Protocol Description

The protocol is a trustless, permissionless, two-sided computing market in which clients purchase computing services from computing nodes. Trustless means that, by default, the protocol does not assume any particular node behaves honestly. Each node is treated as rational and self-interested, and this model does not cover intentionally malicious behavior. Permissionless means that any node can join or leave the network at will. Computing jobs are matched off-chain, and the resulting transactions and results are recorded on-chain. Both the client and the computing node must agree to a match before it becomes a deal, and both deposit funds into the protocol so that rewards and penalties can be enforced. Results are verified by "replicated verification". After a job completes, and before payment is due, the client can check the result by calling the mediation protocol. The mediation protocol is the final source of truth, and its outcome determines how nodes are paid.

• Request Node: Responsible for handling user requests, discovering computing nodes and ranking them, forwarding jobs to computing nodes, and monitoring job lifecycles

• Computing Node: Responsible for executing jobs and generating results. Different computing nodes can be used for different types of jobs, depending on their capabilities and resources.
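The deposit, replicated verification, and mediation flow described above can be sketched as a settlement function. This minimal sketch rests on several assumptions. Jobs are deterministic, so their outputs can be compared by hash. The payout rule is hypothetical: an honest node receives the price plus its collateral back, while a node caught returning a wrong result forfeits its collateral to the client. Mediation fees are ignored. `ComputeDeal` and `settle` are illustrative names, not part of the protocol.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class ComputeDeal:
    price: int            # agreed payment for the job
    client_deposit: int   # escrowed by the client (must cover the price)
    node_deposit: int     # collateral escrowed by the computing node

def digest(output: bytes) -> str:
    return hashlib.sha256(output).hexdigest()

def settle(deal: ComputeDeal, reported: bytes, challenged: bool,
           mediator_rerun: Callable[[], bytes]) -> Tuple[int, int]:
    """Return (amount released to the node, amount returned to the client)."""
    # Unchallenged results are accepted; challenged ones are re-run by the mediator.
    honest = (not challenged) or digest(mediator_rerun()) == digest(reported)
    if honest:
        return deal.price + deal.node_deposit, deal.client_deposit - deal.price
    return 0, deal.client_deposit + deal.node_deposit   # collateral goes to the client
```

In every branch the released amounts add up to the two deposits, so the escrow always balances.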

General Computing Market Protocol Design

Orders: There are three types of orders: bid orders, ask orders, and deal orders. Computing suppliers create ask orders to offer computing resources, and clients create bid orders to request computing resources. When both parties agree on a price, they create a deal order together.

Order book: The order book is public, and every honest user has the same view of it. When a new order transaction (txorder) appears in a new blockchain block, the order is added to the order book. Orders are removed from the order book when they are canceled, expired, or settled. Because orders enter the order book only through transactions included in blockchain blocks, every order in the order book is valid.

Order Data Structure Definition:

Bid Order: bid=

a) The customer has available funds in their account.

b) The time is not set in the past.

c) The computing resources (guaranteed by different storage providers)

Ask Order: ask := (computing, price) Valid if:

a) The computing resources promised by the computing supplier must be greater than the resources required by the order (CPU, memory, etc.).

Deal Order: deal := (ask, bid, ts) Valid if:

a) The reference to the ask order is signed by the customer, and the ask order is in the computing market's order book and is not referenced by any other deal order.

b) The reference to the bid order is signed by the computing supplier, and the bid order is in the computing market's order book and is not referenced by any other deal order.

c) ts is neither in the future nor too far in the past.
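The validity rules for deal orders can be expressed as a check against the order book. In this minimal sketch, signature checks are omitted because orders are assumed to have been validated when their transactions were included in a block. `ts`, `now`, and `max_age` are integer timestamps. The resource fields and `max_price` of the bid are hypothetical, since the bid structure is not specified above.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

@dataclass(frozen=True)
class Ask:                 # ask := (computing, price)
    supplier: str
    cpu: int
    memory_gb: int
    price: int

@dataclass(frozen=True)
class Bid:                 # hypothetical fields; the bid tuple is not specified in the text
    customer: str
    cpu: int
    memory_gb: int
    max_price: int

@dataclass
class OrderBook:
    asks: Dict[str, Ask] = field(default_factory=dict)
    bids: Dict[str, Bid] = field(default_factory=dict)
    in_deal: Set[str] = field(default_factory=set)   # order ids already referenced by a deal

    def deal_is_valid(self, ask_id: str, bid_id: str, ts: int, now: int, max_age: int) -> bool:
        ask, bid = self.asks.get(ask_id), self.bids.get(bid_id)
        if ask is None or bid is None:
            return False                          # both orders must be in the order book
        if ask_id in self.in_deal or bid_id in self.in_deal:
            return False                          # no other deal order may reference them
        if ask.cpu < bid.cpu or ask.memory_gb < bid.memory_gb:
            return False                          # promised resources must cover the request
        if ask.price > bid.max_price:
            return False                          # price must be acceptable to the customer
        return now - max_age <= ts <= now         # ts not in the future and not too old

    def record_deal(self, ask_id: str, bid_id: str, ts: int, now: int, max_age: int) -> bool:
        """Accept the deal and mark both orders as used, if it is valid."""
        if not self.deal_is_valid(ask_id, bid_id, ts, now, max_age):
            return False
        self.in_deal.update({ask_id, bid_id})
        return True
```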

Negotiation Process: The general computing market protocol is divided into two phases: order matching and order settlement.

a) Order matching: Customers and computing suppliers submit transactions to the blockchain to add their orders to the order book. Once orders are matched, the customer sends the data to the computing supplier, and both parties sign the deal order and submit it to the order book.

b) Settlement: The client verifies the computation results, pays the computing supplier, and submits the settlement to the blockchain.

Mechanisms for Decentralized Outsourcing Computation

To provide an open market, we adopt MODiCuM [3], a decentralized system for outsourcing computation. MODiCuM creates an open market for computational resources through several decentralized roles (such as solvers, resource providers, job creators, mediators, and directory services). Resource owners and resource users trade directly with each other, which can increase participation and lead to more competitive prices. MODiCuM resolves disputes through dedicated mediators and imposes enforceable fines to deter misconduct, a key problem in decentralized systems. Unlike other decentralized outsourcing solutions, MODiCuM keeps computational overhead low because it does not require global trust in mediation results.

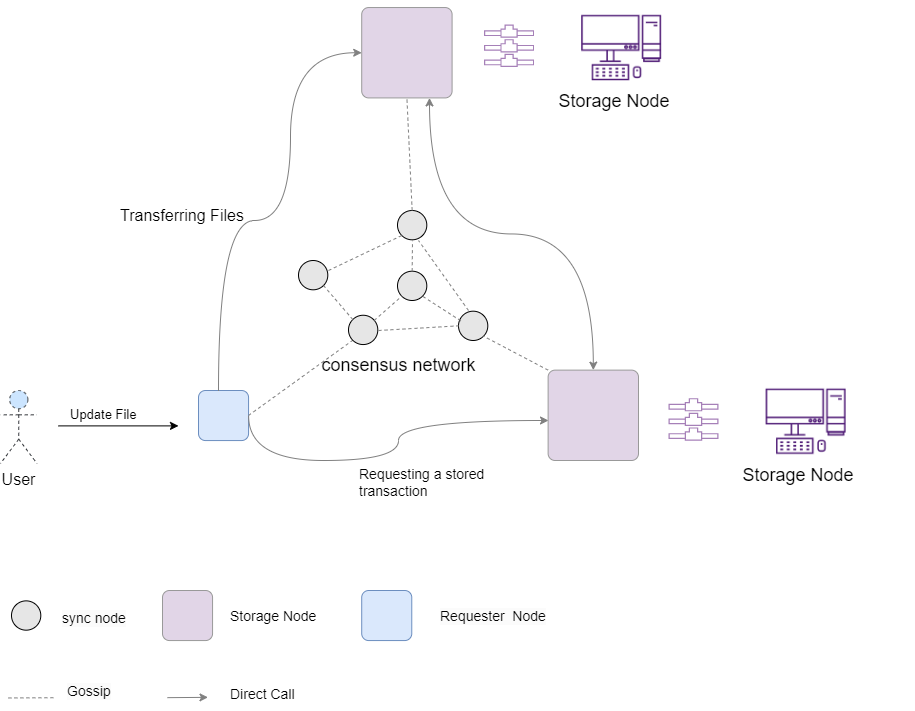

General Storage Market

The general storage market is a verifiable market that allows users to request storage for their own data and also allows storage providers to provide storage resources.

General Storage Market Protocol Description

On-chain order book: Storage provider orders are public, so the network always knows the distribution of storage provider prices, and customers can choose suitable orders according to their storage needs. The customer's transaction price must always be submitted to the order book so that the market can react to new quotes.

Payment method between users and storage providers: To prevent users from paying for storage that a provider never delivers, the storage fee is not paid in one lump sum. Instead, the provider earns a portion of the fee each time it submits a valid proof that it is still storing the file during the agreed period. The customer must deposit the specified funds into the order to guarantee that funds are available and committed throughout the settlement period.
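Paying storage fees in installments can be sketched as follows. The sketch assumes one fixed installment per proof period. It also assumes that installments not earned by a valid proof are returned to the customer at the end of the term, because the text does not state what happens to them.

```python
from typing import List, Tuple

def settle_storage(price_per_period: int, proofs_ok: List[bool]) -> Tuple[int, int]:
    """Return (paid to provider, refunded to customer) at the end of the storage term."""
    deposit = price_per_period * len(proofs_ok)   # escrowed by the customer up front
    earned = price_per_period * sum(proofs_ok)    # one installment per period with a valid proof
    return earned, deposit - earned
```

With 3 units per period over 5 periods and valid proofs in 3 of them, the provider receives 9 and the customer gets 6 back.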

Self-healing of storage failure: Each file is backed up by multiple storage providers, and if a storage provider goes offline, the system will automatically invite new providers to join.
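Self-healing can be sketched as restoring a target replication factor for each file. This minimal sketch assumes that replacement providers are chosen by lowest ask price among online providers that do not already hold the file. The selection rule, the `heal` function, and its parameters are hypothetical, and the transfer of data to new providers is not modeled.

```python
from typing import Dict, Set

def heal(replicas: Set[str], online: Set[str], asks: Dict[str, int], target: int) -> Set[str]:
    """Return the new replica set for one file after dropping offline providers."""
    healthy = {p for p in replicas if p in online}
    # Candidates: online providers with an ask price that do not already hold the file.
    candidates = sorted((price, p) for p, price in asks.items()
                        if p in online and p not in healthy)
    for _, provider in candidates:
        if len(healthy) >= target:
            break
        healthy.add(provider)
    return healthy
```

If too few providers are available, the function returns fewer than `target` replicas. A real system would keep retrying as new ask orders arrive.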

General Storage Market Protocol Design

Orders: There are three types of orders: bid orders, ask orders, and deal orders. Storage providers create ask orders to add storage, and customers create bid orders to request storage. When both parties agree on a price, they create deal orders together.

Order book: The order book is public, and every honest user has the same order book view. If a new order transaction (txorder) appears in a new blockchain block, this new order is added to the order book, and orders are removed from the order book when they are canceled, expired, or settled. The orders are added to the block in the blockchain, so the orders in the order book are all valid.

Order Data Structure Definition:

Bid Order: bid=

Ask Order: ask := (space, price) Valid if:

a) The storage space promised by the storage provider must be larger than the storage space occupied by the data in the order.

Deal Order: deal := (ask[], bid, ts) Valid if:

a) The reference to each ask order is signed by the customer, and each ask order is in the storage market's order book and is not referenced by any other deal order.

b) The reference to the bid order is signed by the storage provider, and the bid order is in the storage market's order book and is not referenced by any other deal order.

c) ts is neither in the future nor too far in the past.

The storage negotiation process involves two stages: order matching and order settlement.

a) Order matching: Customers and storage providers submit transactions to the blockchain to place their orders in the order book. Once orders are matched, the customer sends the data to the storage provider, and both parties sign the deal order and submit it to the order book.

b) Settlement: The storage provider generates file storage proofs and submits them to the blockchain to obtain storage fees. Meanwhile, the rest of the network must verify the proofs generated by the storage provider and repair any faults.

File Continuous Storage Proof

The file continuous storage proof [4] scheme allows users to detect whether a storage provider keeps storing outsourced data during the challenge period. A verifier V can check that a prover P stored the outsourced data D throughout a time period t. The prover's task is to convince the verifier that P stored D during t. A scheme consists of three algorithms:

(Setup, Prove, Verify)

Setup(1^k, D) -> (Sp, Sv), where Sp and Sv are setup variables specific to the prover P and the verifier V, and k is the security parameter. Setup provides P and V with the information needed to run Prove and Verify. Some schemes may require interaction with the prover or a third party to run Setup.

Prove(Sp, D, c, t) -> πc, where c is a random challenge issued by the verifier V, and πc is the prover's proof of access to the outsourced data D within a time period t. Prove is run by P to generate a proof for V.

Verify(Sv, c, t, πc) -> {0, 1} to check if the proof is correct. Verify is run by V to be convinced that P stores data D within a time period t.

Post Quantum Cryptography Verifiable Consensus

We propose a post-quantum cryptography verifiable consensus protocol in which miners generate verifiable proofs of work using a post-quantum cryptography verifiable random function (PQCVRF). Miners must continuously generate signatures with their own private keys. This prevents miners from forming mining pools, because other miners could only help generate the proof of work if the miner shared its private key, which miners will not do. To improve blockchain scalability and consensus efficiency, the protocol collects multiple blocks through generations to reduce the number of orphaned blocks. More blocks can then be confirmed and less network hashrate is wasted, which makes the network more secure and gives miners more rewards.

Post Quantum Cryptography Verifiable Random Function

A verifiable random function (VRF) is a cryptographic scheme that maps an input to a pseudorandom output whose correctness can be verified. The VRF output is derived from a signature made with the generator's private key, so anyone holding the corresponding public key can check that the output was computed correctly. A VRF has three main properties: verifiability, uniqueness, and pseudorandomness. The post-quantum cryptography verifiable random function is built on post-quantum algorithms so that its random output remains secure against quantum computer attacks.

The function PQCVRF_Generate generates quantum-resistant key pairs:

Input: a random value R ∈ [1, n−1];

Output: multiple quantum-resistant key pairs (skFalcon, pkFalcon), (skDilithium, pkDilithium);

(skFalcon,pkFalcon),(skDilithium,pkDilithium)=KeyGen(R);

The function PQCVRF_Evaluate generates random numbers and proofs:

Input: Message m, private key ski ∈ (skFalcon, skDilithium)

Output: Random number ∂, proof Δproof

Δproof = multiSignatures (sign(skFalcon), sign(skDilithium),m); ∂=Hash(Δproof);

The function PQCVRF_Verify verifies:

Input: Message m, random number ∂, Δproof, pki ∈ (pkFalcon, pkDilithium)

Output: TRUE/FALSE

Check that ∂ = Hash(Δproof) holds;

TRUE/FALSE = Verify(Δproof, m, {pkFalcon, pkDilithium})
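PQCVRF_Generate, PQCVRF_Evaluate, and PQCVRF_Verify can be sketched with the same structure as above. The proof is the multi-signature of the message, and the random number is the hash of the proof. Falcon and Dilithium are not in Python's standard library, so two Lamport one-time signers over SHA-256 and SHA3-256 stand in for them. The keys are derived deterministically from R with SHAKE256, which is a hypothetical key-derivation choice. Lamport keys are one-time, so a key pair in this sketch must only be evaluated on a single message. The uniqueness of ∂ depends on the signature being deterministic for a given key and message. If a signature scheme adds fresh randomness to each signature, the same key can produce many different valid ∂ values for one message.

```python
import hashlib
from typing import List, Tuple

# Two one-time Lamport signers over different hash functions stand in for Falcon and Dilithium.
HASHES = {
    "stand-in-A": lambda b: hashlib.sha256(b).digest(),
    "stand-in-B": lambda b: hashlib.sha3_256(b).digest(),
}

def _bits(digest: bytes) -> List[int]:
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]

def pqcvrf_generate(R: bytes):
    """Derive one key pair per stand-in scheme deterministically from the seed R."""
    sk, pk = {}, {}
    for name, h in HASHES.items():
        pairs = []
        for i in range(256):
            seed = R + name.encode() + i.to_bytes(2, "big")
            pairs.append((hashlib.shake_256(seed + b"\x00").digest(32),
                          hashlib.shake_256(seed + b"\x01").digest(32)))
        sk[name] = pairs
        pk[name] = [(h(a), h(b)) for a, b in pairs]
    return sk, pk

def pqcvrf_evaluate(sk, m: bytes) -> Tuple[bytes, bytes]:
    """Return (random number, proof): proof = concatenated signatures, random number = Hash(proof)."""
    proof = b""
    for name, h in HASHES.items():
        proof += b"".join(sk[name][i][bit] for i, bit in enumerate(_bits(h(m))))
    return hashlib.sha3_256(proof).digest(), proof

def pqcvrf_verify(pk, m: bytes, rand: bytes, proof: bytes) -> bool:
    """Check rand = Hash(proof), then check every component signature."""
    if len(proof) != len(HASHES) * 256 * 32 or hashlib.sha3_256(proof).digest() != rand:
        return False
    chunks = [proof[k:k + 32] for k in range(0, len(proof), 32)]
    for e, (name, h) in enumerate(HASHES.items()):
        part = chunks[e * 256:(e + 1) * 256]
        for i, (bit, s) in enumerate(zip(_bits(h(m)), part)):
            if h(s) != pk[name][i][bit]:
                return False
    return True
```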

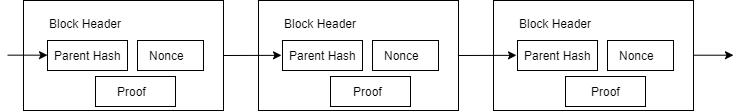

PQC-POW (Post Quantum Cryptography Proof of Work):

by limiting memory. Large numbers of random memory reads are limited not only by the speed of the computing units but also by the read speed of memory.

• Decentralization: Miners generate fair, confidential, and publicly verifiable proofs of work, which prevents the formation of centralized organizations such as mining pools and ensures decentralized security.

• Election of Miners: Miners are elected through a difficulty calculation based on the quantum-resistant verifiable random function. Miners input a random number R to generate a key pair:

pki, ski= PQCVRF_Generate(R)

Proof of Work Calculation: The miner keeps trying new random values (Nonce) in the block until one meets the difficulty condition (Difficulty). Once a valid proof of work has been found, the block cannot be changed without redoing that work. Because new blocks are continuously appended, changing a block also means redoing the work for every block after it.

The process of generating a proof of work is as follows:

Step 1: Obtain the nbit field parameter from the block header, which specifies the number of equations and variables in the MQ equation set.

Step 2: Generate the coefficients of the MQ equation set from head (the block header excluding the Nonce):

Coefficients of the MQ equation set = shake256XoF(head)

Step 3: Generate a verifiable random Nonce. Input the private key ski ∈ (skFalcon, skDilithium) and the random number R into PQCVRF_Evaluate, which outputs the random number Nonce and the quantum-resistant proof Δproof:

Nonce, Δproof = PQCVRF_Evaluate(ski, R)

Step 4: Difficulty check. If the following condition holds, output the proof of work:

MQHASH(shake256XoF(Nonce)).bits(0, 9) > threshold
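Steps 2 and 4 can be sketched as follows. This is a minimal illustration under stated assumptions, not the DQASN implementation: the equations are taken over GF(2) (where x_i² = x_i, so only products x_i·x_j with i < j appear), bits are read least-significant-bit first from the SHAKE256 output, `bits(0, 9)` is read as output bits 0 through 9 interpreted as a 10-bit integer, and the function names and the 16×16 system size in the test are hypothetical. In a real block, the number of equations and variables would come from the nbit field (Step 1).

```python
import hashlib

def _shake_bits(data: bytes, count: int) -> list[int]:
    """Read `count` bits (LSB-first within each byte) from SHAKE256(data)."""
    stream = hashlib.shake_256(data).digest((count + 7) // 8)
    return [(stream[k // 8] >> (k % 8)) & 1 for k in range(count)]

def mq_coefficients(head: bytes, n_eq: int, n_var: int) -> list[list[int]]:
    """Step 2: derive GF(2) coefficients for n_eq quadratic equations from head."""
    # Per equation: x_i*x_j terms (i < j), then x_i terms, then one constant term.
    terms = n_var * (n_var - 1) // 2 + n_var + 1
    bits = _shake_bits(head, n_eq * terms)
    return [bits[e * terms:(e + 1) * terms] for e in range(n_eq)]

def mq_eval(coeffs: list[list[int]], x: list[int]) -> list[int]:
    """Evaluate each equation at the GF(2) point x (XOR is addition, AND is product)."""
    n = len(x)
    out = []
    for poly in coeffs:
        acc, k = 0, 0
        for i in range(n):
            for j in range(i + 1, n):
                acc ^= poly[k] & x[i] & x[j]
                k += 1
        for i in range(n):
            acc ^= poly[k] & x[i]
            k += 1
        out.append(acc ^ poly[k])  # add the constant term
    return out

def mq_hash(coeffs: list[list[int]], nonce: bytes, n_var: int) -> list[int]:
    """MQHASH(shake256XoF(Nonce)): expand the Nonce to n_var input bits, then evaluate."""
    return mq_eval(coeffs, _shake_bits(nonce, n_var))

def meets_difficulty(coeffs: list[list[int]], nonce: bytes, n_var: int, threshold: int) -> bool:
    """Step 4: compare output bits 0..9, read as a 10-bit integer, with the threshold."""
    out = mq_hash(coeffs, nonce, n_var)
    if len(out) < 10:
        raise ValueError("need at least 10 equations to read bits(0, 9)")
    value = 0
    for bit in out[:10]:
        value = (value << 1) | bit
    return value > threshold
```

Because the coefficients depend only on head and the input bits depend only on the Nonce, a verifier that recomputes `mq_coefficients` and `meets_difficulty` from the same header and Nonce reaches the same result as the miner.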

Proof of Work Verification: The verification node checks the validity of the proof of work from the parameters Nonce, Δproof, R, and pki ∈ (pkFalcon, pkDilithium).

Verify the proof of work:

Step 1: Obtain the nbit field parameter from the block header, which specifies the number of equations and variables in the MQ equation set.

Step 2: Generate the coefficients of the MQ equation set from head (the block header excluding the Nonce):

Coefficients of the MQ equation set = shake256XoF(head)

Step 3: Check whether the result meets the threshold. If it does, proceed to verify the miner's identity:

MQHASH(shake256XoF(Nonce)).bits(0, 9) > threshold

Step 4: Verify the miner's identity. Verification against pki ensures that the proof of work was generated by the miner itself:

TRUE/FALSE = PQCVRF_Verify(R, Nonce, Δproof, pki)

PQCVRF_Verify checks the miner's identity, which ensures that a miner can compute its proof of work only with its own computing power and prevents centralized organizations such as mining pools from mining. The key to the difficulty calculation is the continuous generation of verifiable random numbers (hashes of signatures). For other miners to help with the difficulty calculation, a miner would have to share its private key with them so they could produce the signatures. To protect their funds, miners will not share their private keys.

Quantum Entanglement Protocol

The quantum entanglement protocol is based on the GHOST (Greedy Heaviest Observed Subtree) protocol, which Yonatan Sompolinsky and Aviv Zohar introduced in December 2013. Its motivation is that blockchains with fast confirmation times suffer reduced security because of their high rate of stale blocks. A block takes some time (t) to propagate across the entire network. If miner A mines a block and miner B happens to mine another block before A's block has reached B, B's block becomes stale and does not contribute to the security of the network.

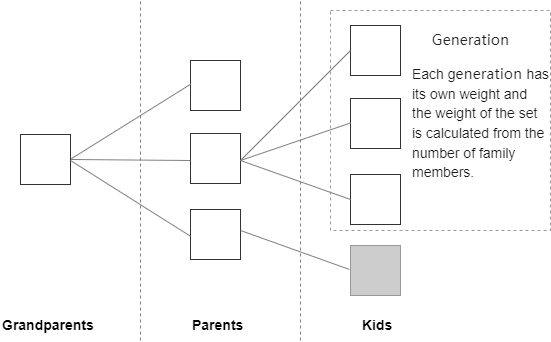

In each round of block generation, the quantum entanglement protocol collects multiple blocks into a set called a Generation. When determining which chain is the "longest", it computes a block's weight from not only the block's parent and earlier ancestors but also the discarded descendant blocks of those ancestors. The Generation set is organized as a directed acyclic graph (DAG) so that no mining work is wasted, which encourages collaboration and increases overall chain throughput. Moreover, because all blocks in a Generation must share the same parent block and be mined at the same height, the chain converges quickly when forks occur. Finally, blocks backed by more computational power carry more weight. Over time, miners concentrate on the heaviest chain, and lighter chains become isolated.
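The heaviest-subtree rule described above can be sketched as follows. This is a minimal sketch of the standard GHOST fork-choice rule, not DQASN's implementation: it does not model Generations or the DAG structure, and the block IDs, the optional per-block `work` weights (standing in for computational power), and the tie-break on block ID are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Optional

def ghost_tip(parents: Dict[str, Optional[str]],
              work: Optional[Dict[str, int]] = None) -> str:
    """Follow the child with the heaviest subtree from genesis down to a tip."""
    children: Dict[str, List[str]] = defaultdict(list)
    root = None
    for blk, par in parents.items():
        if par is None:
            root = blk                      # assumes exactly one genesis block
        else:
            children[par].append(blk)

    # Pre-order DFS lists every block before its descendants ...
    order, stack = [], [root]
    while stack:
        blk = stack.pop()
        order.append(blk)
        stack.extend(children[blk])

    # ... so a reverse pass computes subtree weights bottom-up.
    weight: Dict[str, int] = {}
    for blk in reversed(order):
        own = work.get(blk, 1) if work else 1   # default: each block weighs 1
        weight[blk] = own + sum(weight[c] for c in children[blk])

    tip = root
    while children[tip]:
        # Heaviest subtree wins; ties are broken by block ID (an assumption).
        tip = max(children[tip], key=lambda c: (weight[c], c))
    return tip

# Two branches from genesis G: a 4-block chain A and a bushier branch B.
parents = {"G": None,
           "A1": "G", "A2": "A1", "A3": "A2", "A4": "A3",
           "B1": "G", "B2": "B1", "B3": "B1", "B4": "B1", "B5": "B2"}
print(ghost_tip(parents))
```

In this example the longest-chain rule would choose A4 (height 4), while the heaviest-subtree rule also counts B1's stale children B3 and B4, gives B1 a weight of 5 against A1's 4, and returns B5.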

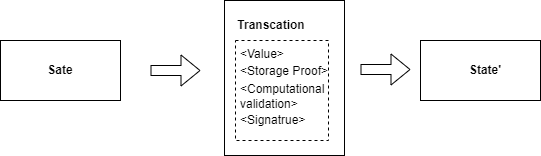

Smart Contracts

Smart contracts allow users to write programs that spend tokens, request computation or storage, and verify the state transitions of that computation or storage. Users interact with a smart contract by sending transactions to the ledger that trigger functions within the contract. We have extended the smart contract system to support specific operations, such as market operations and proof verification.

Token and Minting

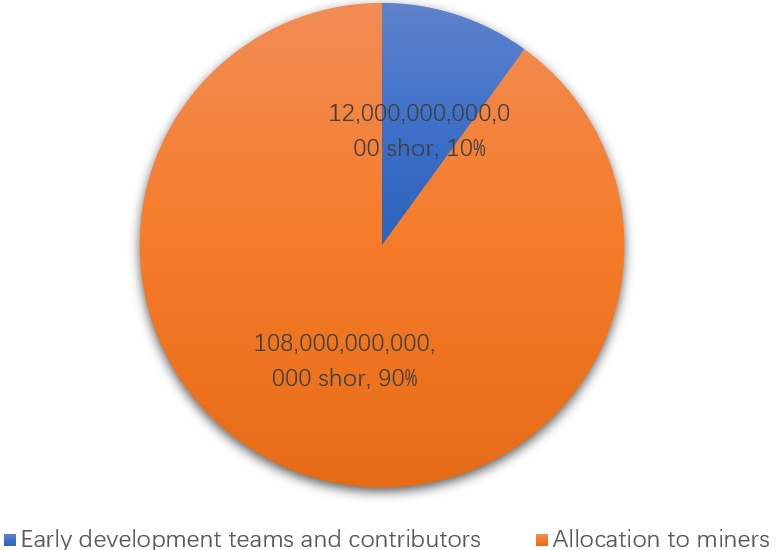

Token Distribution

The token distribution of Qoin is broken down as follows. A maximum of 120,000,000,000,000 Shor will be created. In the Qoin genesis block allocation, 10% goes to the early development team and contributors. The remaining 90% is allocated to miners as mining rewards "for maintaining the blockchain, distributing data, running contracts, etc." At network launch, the only mining group receiving incentives will be PoW miners. As the earliest mining group, responsible for maintaining the core functionality of the protocol, this group receives the largest share of mining rewards: the full 90% is allocated to PoW mining.

Token Minting Cycle

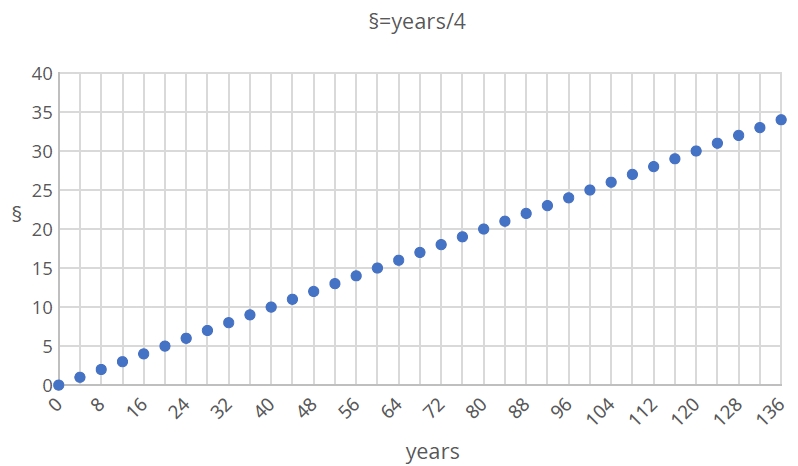

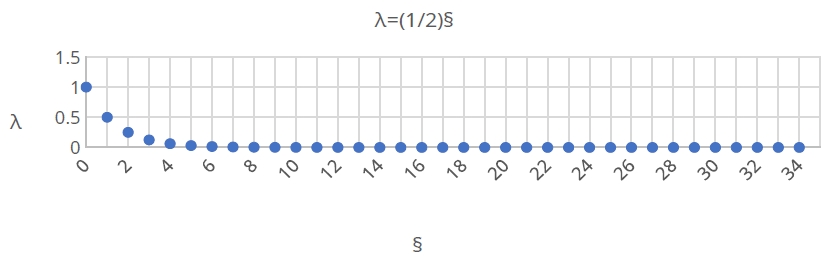

Qoin mints tokens based on a simple exponential decay model, with a total issuance of 120,000,000,000,000 Shor and a continuous minting duration of 136 years, primarily for PoW miner rewards, with the minting amount halving every 4 years.

Total issuance: 120,000,000,000,000 Shor

Minting duration: 136 years

§ (four-year period index): $\S = \lfloor y / 4 \rfloor$, where $y$ is the number of years since issuance began, so $\S \in \{0, 1, 2, 3, 4, \dots\}$

λ (four-year period decay factor): $\lambda = (1/2)^{\S}$

Block reward

Initial block reward: 13.5 trillion Shor per year. The reward is halved every four years until it reaches the currency issuance limit.
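The figures above fit together: 13.5 trillion Shor per year over the first four-year period is 54 trillion Shor, and halving that amount each period sums to just under 108 trillion Shor over 34 periods (136 years), which is the 90% mining share of the 120 trillion total. The sketch below checks this arithmetic. It assumes year 0 is the first year of issuance and uses exact fractions; the text does not say how fractional Shor amounts are rounded, and the constant and function names are hypothetical.

```python
from fractions import Fraction

TOTAL_SUPPLY = 120_000_000_000_000            # Shor
TEAM_SHARE = Fraction(1, 10)                  # genesis allocation to team and contributors
INITIAL_ANNUAL_REWARD = 13_500_000_000_000    # Shor per year in the first period
HALVING_PERIOD_YEARS = 4
MINTING_YEARS = 136

def annual_reward(year: int) -> Fraction:
    """Mining reward for issuance year `year` (0 = first year)."""
    period = year // HALVING_PERIOD_YEARS     # §
    decay = Fraction(1, 2) ** period          # λ = (1/2)^§
    return INITIAL_ANNUAL_REWARD * decay

def total_mined(years: int = MINTING_YEARS) -> Fraction:
    """Cumulative mining rewards over the first `years` years."""
    return sum((annual_reward(y) for y in range(years)), Fraction(0))

mining_budget = TOTAL_SUPPLY * (1 - TEAM_SHARE)   # 90% share for PoW mining

print(f"mining budget:       {float(mining_budget):,.0f} Shor")
print(f"minted in 136 years: {float(total_mined()):,.2f} Shor")
```

Over 34 periods the cumulative reward is 54 trillion × (2 − 2⁻³³) Shor, roughly 6,286 Shor short of the 108 trillion mining budget.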

Transaction fees and block reward:

After producing a block, a miner receives two types of rewards: transaction fees and block rewards. Every transaction pays a fee to the miner, and in addition the miner receives a block reward. The block reward halves every four years until 2160, when total issuance reaches 120 trillion Shor and issuance stops. After that, taking miner incentives and currency consumption into account, a corresponding reward strategy will be adopted to keep the total currency supply stable over the long term.

Blockchain as Services

Decentralized Web3.0 Browser (Browser as a Block Node)

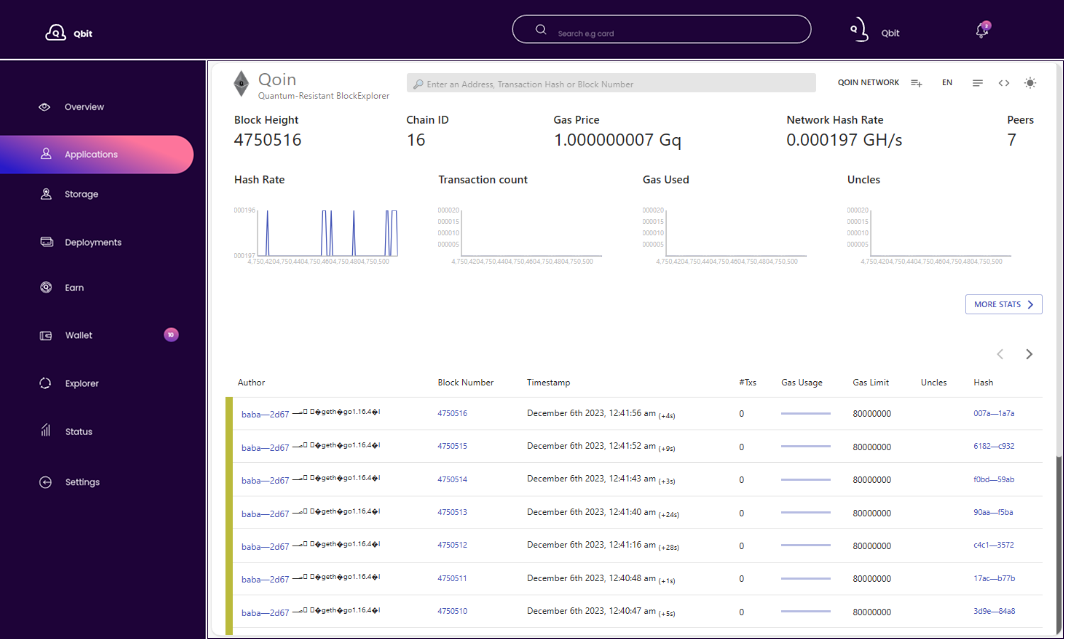

In current Web3.0 applications, the blockchain network and the browser are separate: the browser must bridge to a node to access blockchain data, which is an obstacle for ordinary users. Binding a blockchain data node to the browser kernel lets one application both store distributed network node data and browse the web, producing a truly decentralized browser. This gives users integrated front-end and back-end services and lowers the barrier to entry. The browser client is shown in the figure.

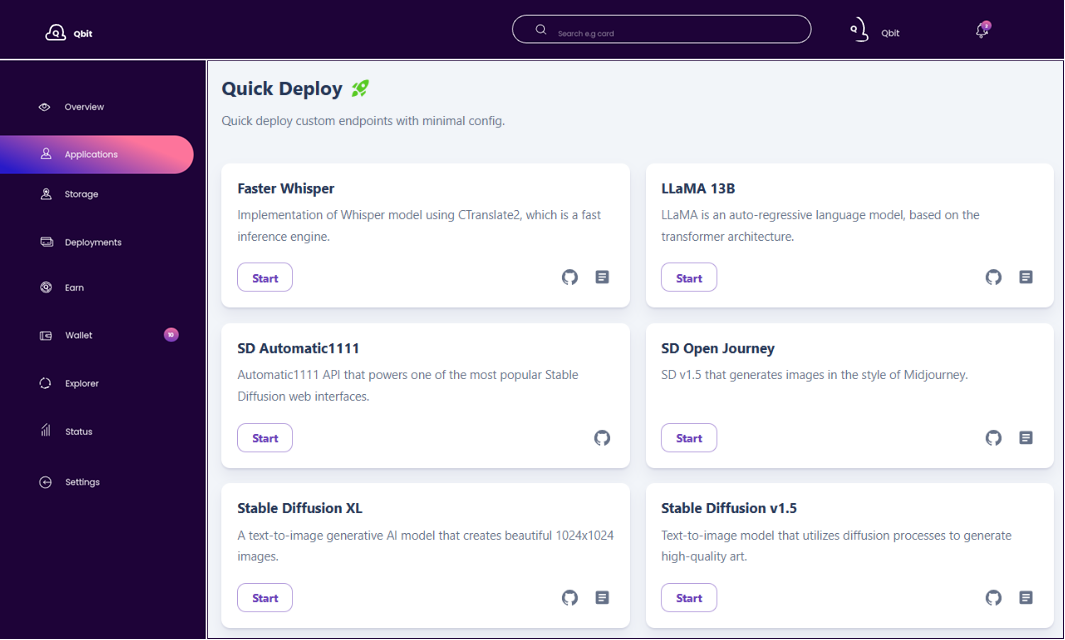

Fast Training of AI Models

Currently, most people use public AI models, which raises significant security and privacy issues: users ask AI all kinds of questions, including work-assistance questions, personal privacy questions, emotional questions, and customer-service questions. These questions can leak company trade secrets, personal information, and personal emotional problems, which may then be exploited for profit. Training a personal AI model on the DQASN network can effectively prevent the leakage of important user information, because the decentralized network protects user privacy. It is also easy to use: users can quickly train an AI model by selecting a smart AI model, choose fine-tuning of an existing AI model, or even train a large AI model. The user deployment interface is shown in the figure.

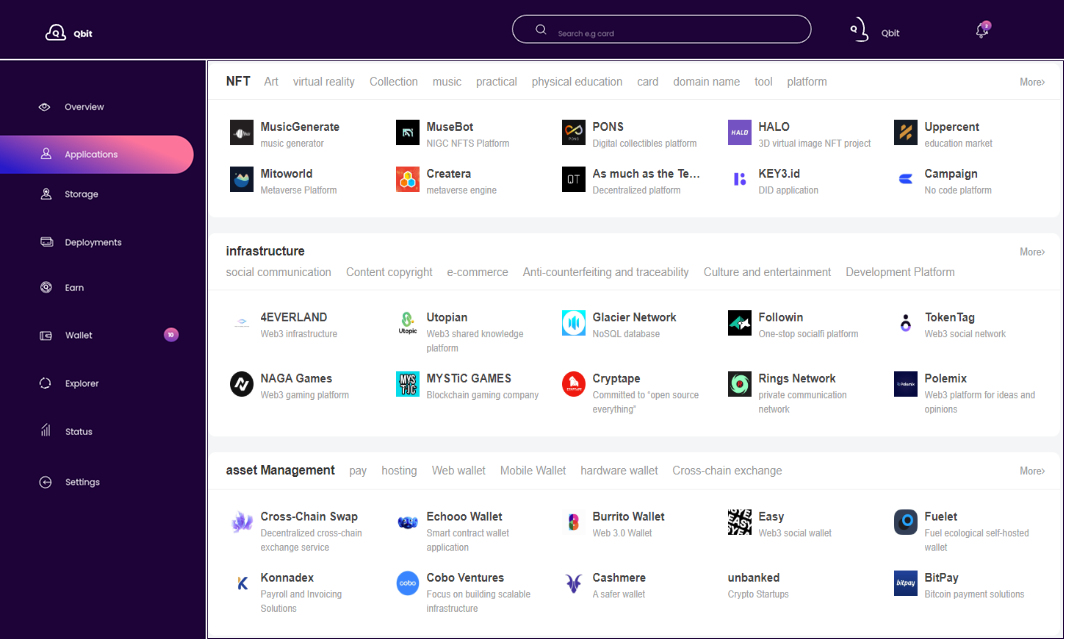

Decentralized Application Release

Users can easily create their own Web3.0 applications, such as a decentralized personal website or an online mall. They can also freely share their decentralized applications and build a free application community.

Future Work

Our team provides a clear and long-term roadmap for the construction of the Decentralized Quantum-resistant AI Supercomputing Network (DQASN); however, we also believe that this work is the starting point for future research on the Decentralized Quantum-resistant AI Supercomputing Network.

• Issue a stablecoin anchored to computer hardware facilities (storage devices, computing devices, etc.), mainly for settling computing or storage services and other asset transactions. Computing and storage devices are relatively stable commodities with low market volatility and long service lives, which makes them high-quality value anchors. A stable settlement currency keeps users' costs predictable while they use services and is the foundation for completing transactions.

• Build a decentralized application market ecosystem to provide developers with creative, development, distribution, operation, and analysis services throughout the entire lifecycle, providing developers with rich tools, capabilities, resource support, and full-stack solutions.

• Provide the underlying blockchain services for decentralized physical infrastructure networks, including access for basic hardware facilities (AI computing hardware, storage hardware, general-purpose computing hardware, shared cars, shared charging stations, etc.), digital assetization of those facilities, settlement for hardware facility services, and the construction of a decentralized infrastructure trading market.